Your Credentials

To determine your credentials in Azure:

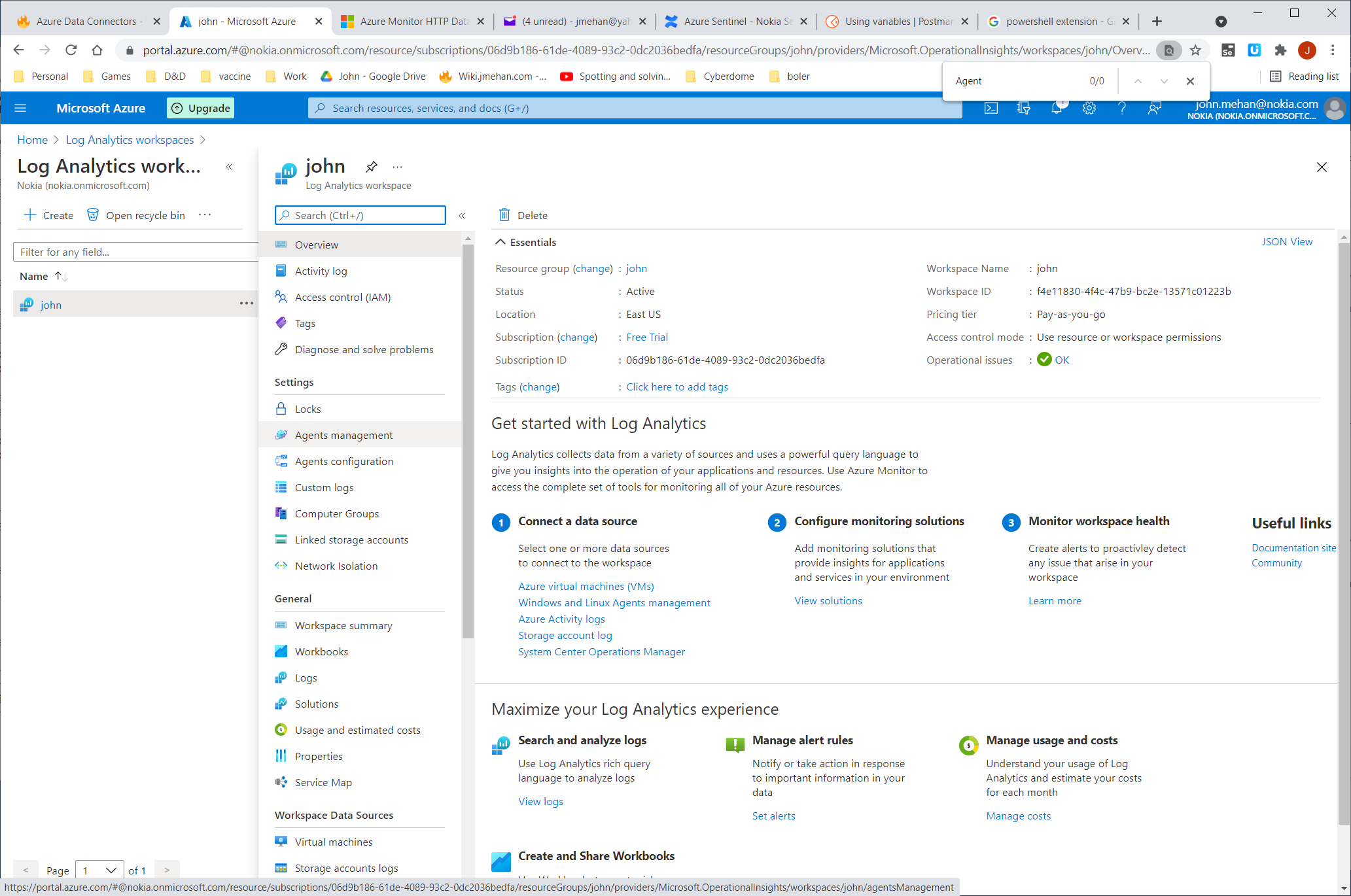

- In the Azure portal, locate your Log Analytics workspace.

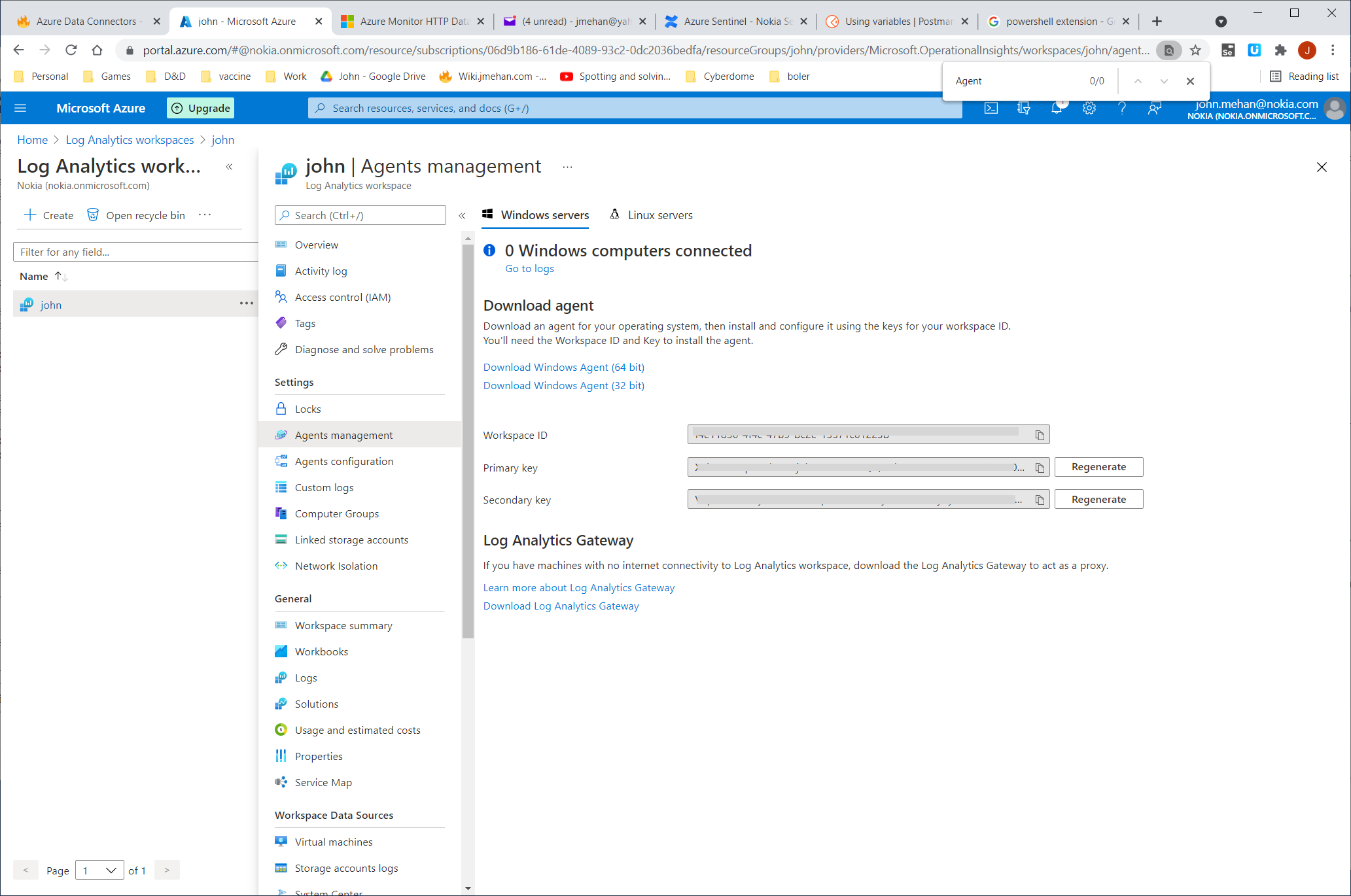

- Select Agents management.

- To the right of Workspace ID, select the copy icon, and then paste the ID as the value of the Customer ID variable.

- To the right of Primary Key, select the copy icon, and then paste the key as the value of the Shared Key variable.

Azure HTTP Data Collector API

https://docs.microsoft.com/en-us/azure/azure-monitor/logs/data-collector-api

Authorization Header

Any request to the Azure Monitor HTTP Data Collector API must include an authorization header. To authenticate a request, you must sign the request with either the primary or the secondary key for the workspace that is making the request. Then, pass that signature as part of the request.

Here's the format for the authorization header:

Authorization: SharedKey <WorkspaceID>:<Signature>

WorkspaceID is the unique identifier for the Log Analytics workspace. Signature is a Hash-based Message Authentication Code (HMAC) that is constructed from the request and then computed by using the SHA256 algorithm. Then, you encode it by using Base64 encoding.

Use this format to encode the SharedKey signature string:

    StringToSign = VERB + "\n" +
                   Content-Length + "\n" +
                   Content-Type + "\n" +
                   "x-ms-date:" + x-ms-date + "\n" +
                   "/api/logs";

Here's an example of a signature string:

POST\n1024\napplication/json\nx-ms-date:Mon, 04 Apr 2016 08:00:00 GMT\n/api/logs
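As a cross-check, the string-to-sign layout can be reproduced in a few lines of Python. This is a minimal sketch: `build_string_to_sign` is a hypothetical helper name (not part of any Azure SDK), and the only thing it encodes is the field order given above.

```python
def build_string_to_sign(verb: str, content_length: int, content_type: str,
                         x_ms_date: str, resource: str = "/api/logs") -> str:
    """Hypothetical helper: join the SharedKey fields in the documented order."""
    return "\n".join([
        verb,                      # e.g. POST
        str(content_length),       # body length in bytes
        content_type,              # e.g. application/json
        "x-ms-date:" + x_ms_date,  # RFC 1123 date, also sent as a header
        resource,                  # always /api/logs for this API
    ])


# Reproduces the example signature string shown above.
example = build_string_to_sign("POST", 1024, "application/json",
                               "Mon, 04 Apr 2016 08:00:00 GMT")
print(repr(example))
# → 'POST\n1024\napplication/json\nx-ms-date:Mon, 04 Apr 2016 08:00:00 GMT\n/api/logs'
```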

When you have the signature string, sign it with the HMAC-SHA256 algorithm over the UTF-8-encoded string, using the Base64-decoded workspace key as the HMAC key, and then encode the result as Base64. Use this format:

Signature=Base64(HMAC-SHA256(UTF8(StringToSign)))
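A minimal Python sketch of the signing step and the resulting Authorization header, using only the standard library. It assumes the key is the Base64 text copied from Primary Key, which is why it is decoded to raw bytes before being used as the HMAC key. `build_signature` and `build_authorization_header` are hypothetical names, and the workspace ID and key below are placeholders, not real credentials.

```python
import base64
import hashlib
import hmac


def build_signature(string_to_sign: str, shared_key_b64: str) -> str:
    """Hypothetical helper: Base64(HMAC-SHA256(UTF8(StringToSign)))."""
    key = base64.b64decode(shared_key_b64)  # workspace key is stored as Base64 text
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


def build_authorization_header(workspace_id: str, signature: str) -> str:
    """Hypothetical helper: Authorization: SharedKey <WorkspaceID>:<Signature>."""
    return f"SharedKey {workspace_id}:{signature}"


# Placeholder credentials for illustration only; substitute your own
# Customer ID (Workspace ID) and Shared Key (Primary Key).
workspace_id = "00000000-0000-0000-0000-000000000000"
shared_key = base64.b64encode(b"not-a-real-key").decode("ascii")

string_to_sign = ("POST\n1024\napplication/json\n"
                  "x-ms-date:Mon, 04 Apr 2016 08:00:00 GMT\n/api/logs")
signature = build_signature(string_to_sign, shared_key)
auth = build_authorization_header(workspace_id, signature)
print(auth)  # SharedKey 00000000-...:<44-character Base64 signature>
```

The same function works with the secondary key; only the key passed in changes.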

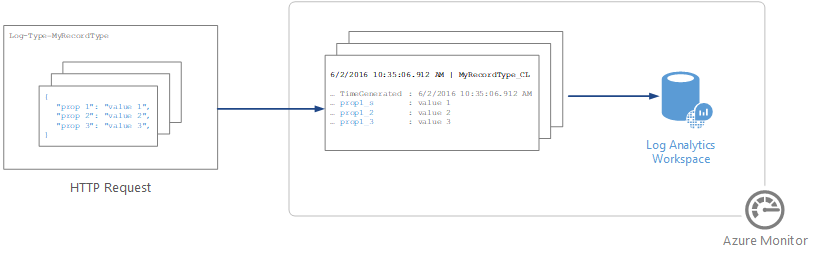

Request Body

The body of the message must be in JSON.

It must contain one or more records, each made up of property name/value pairs in the following format. Property names can contain only letters, numbers, and underscores (_).

    [
        {
            "property_1": "value1",
            "property_2": "value2",
            "property_3": "value3",
            "property_4": "value4"
        }
    ]

Sample Script/Program

Sample PowerShell script that pushes data to your workspace:

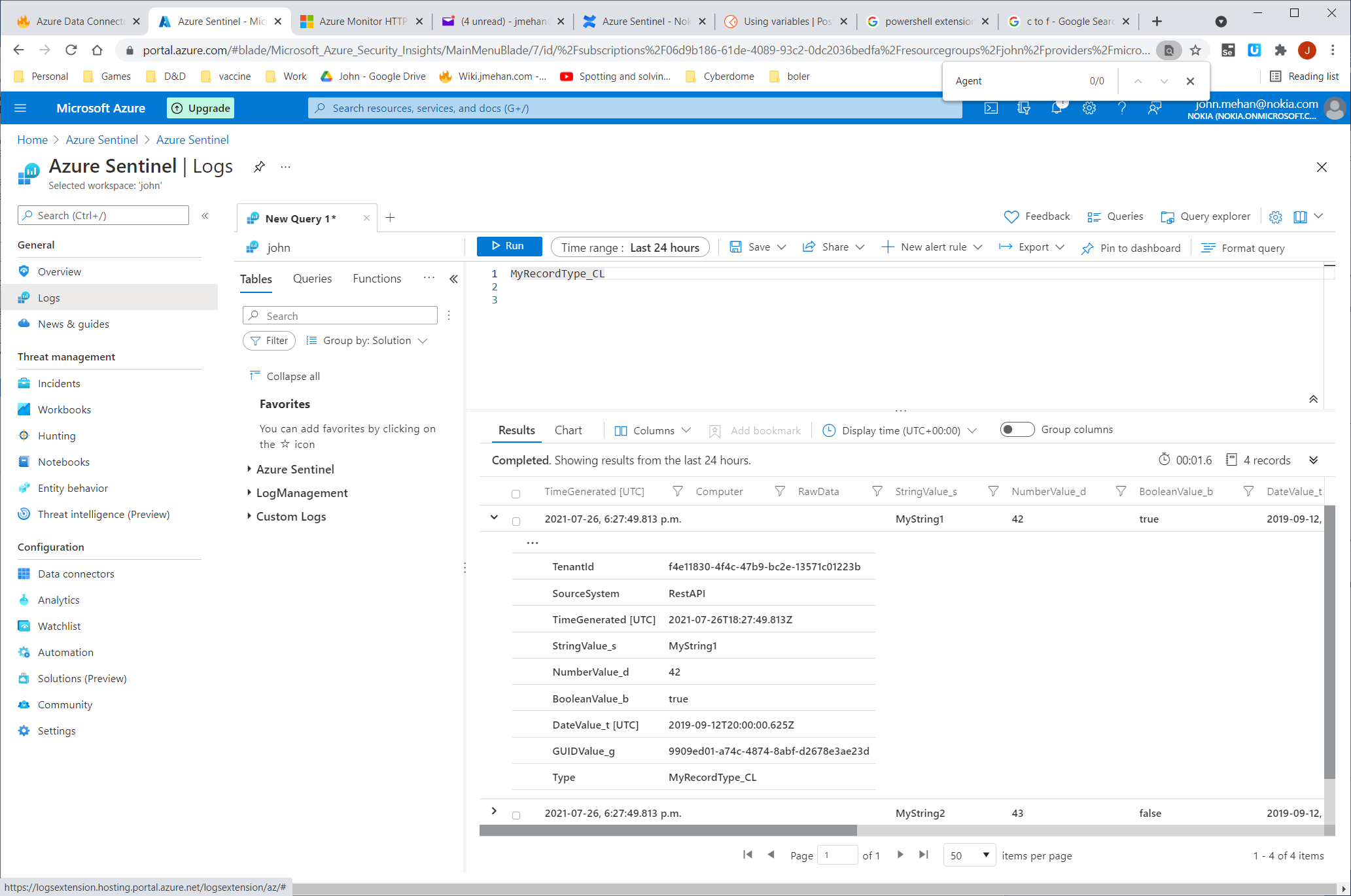

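Resulting custom table (LogType): MyRecordType_CL. Azure Monitor appends the _CL suffix to the Log-Type value sent with the request, so a LogType of MyRecordType produces this table.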
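For readers who prefer Python to PowerShell, the same flow (serialize, sign, POST) can be sketched with the standard library alone. This is not a translation of the script in the screenshot. It assumes the public-cloud endpoint `https://<CustomerID>.ods.opinsights.azure.com/api/logs?api-version=2016-04-01` from the HTTP Data Collector API reference linked above, and `build_request` and `send` are hypothetical helper names.

```python
import base64
import email.utils
import hashlib
import hmac
import json
import urllib.request
from typing import Optional

# API version used in the HTTP Data Collector API reference.
API_VERSION = "2016-04-01"


def build_request(customer_id: str, shared_key: str, log_type: str,
                  records: list, timestamp: Optional[float] = None
                  ) -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) a signed Data Collector POST.

    customer_id is the Workspace ID; shared_key is the Base64 Primary Key.
    """
    body = json.dumps(records).encode("utf-8")
    # RFC 1123 date such as "Mon, 04 Apr 2016 08:00:00 GMT"; current time if None.
    x_ms_date = email.utils.formatdate(timestamp, usegmt=True)
    string_to_sign = "\n".join(["POST", str(len(body)), "application/json",
                                "x-ms-date:" + x_ms_date, "/api/logs"])
    digest = hmac.new(base64.b64decode(shared_key),
                      string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    signature = base64.b64encode(digest).decode("ascii")
    url = (f"https://{customer_id}.ods.opinsights.azure.com"
           f"/api/logs?api-version={API_VERSION}")
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"SharedKey {customer_id}:{signature}",
        "Log-Type": log_type,  # stored by Azure Monitor as <log_type>_CL
        "x-ms-date": x_ms_date,
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def send(request: urllib.request.Request) -> int:
    """Hypothetical helper: POST the request and return the HTTP status (200 on success)."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.status
```

Keeping construction separate from sending makes the signing testable offline. Calling `send(build_request(customer_id, shared_key, "MyRecordType", records))` performs the POST; `urlopen` raises `urllib.error.HTTPError` for error responses.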

Querying Submitted Data

Azure Arc

https://docs.microsoft.com/en-us/azure/architecture/hybrid/arc-hybrid-kubernetes

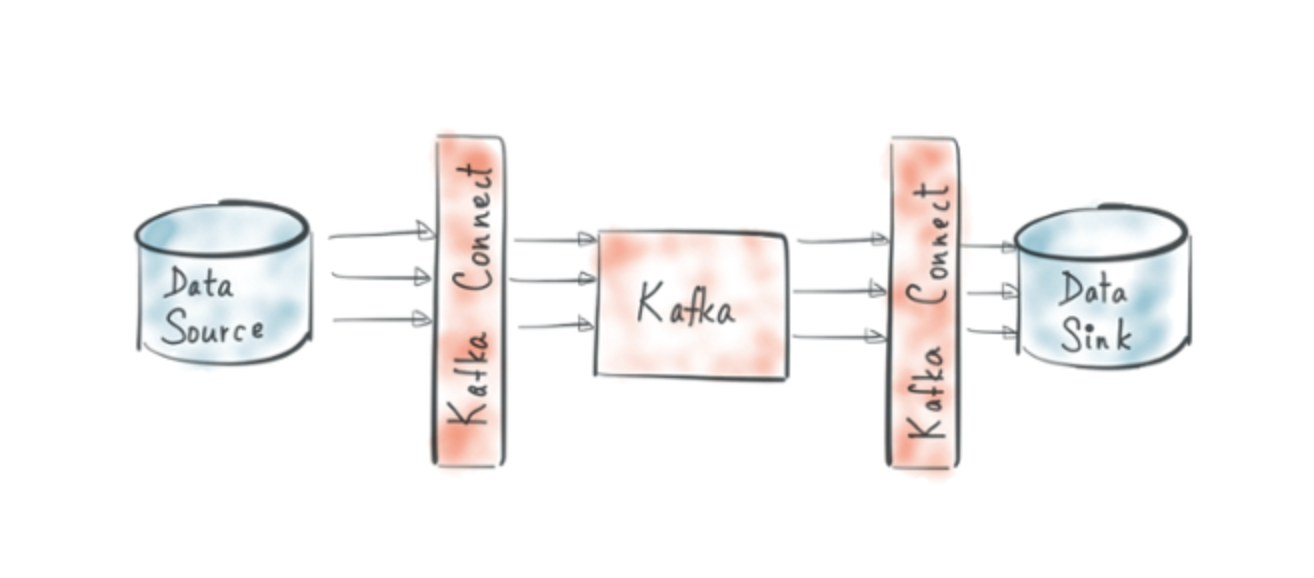

Kafka Connect with Azure Log Analytics Sink Connector

https://www.confluent.de/hub/chaitalisagesh/kafka-connect-log-analytics

Log Analytics Agent for Linux

https://docs.microsoft.com/en-us/azure/azure-monitor/agents/agent-linux

Pushes data to Azure Data Collector API.

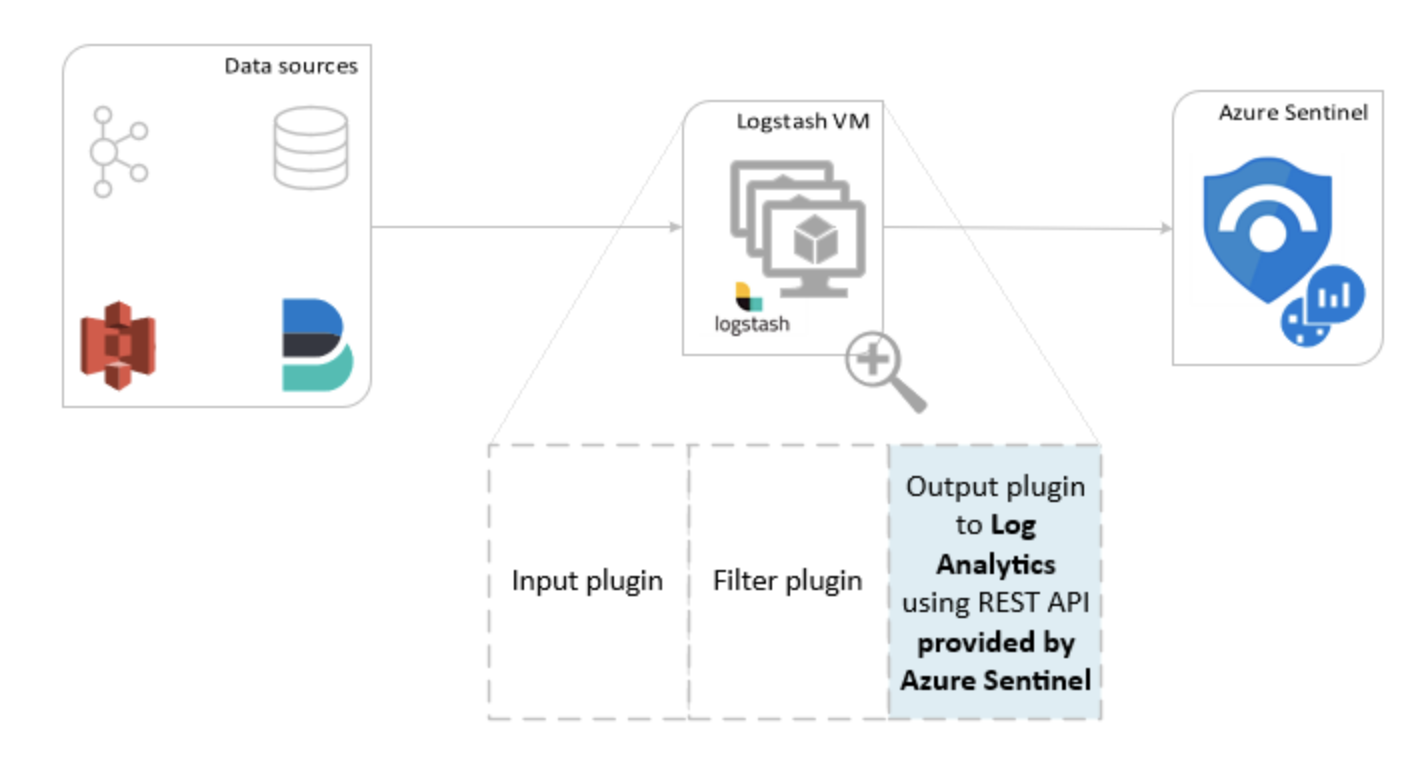

Logstash

https://docs.microsoft.com/en-us/azure/sentinel/connect-logstash

Pushes data to Azure Data Collector API.

"The components for log parsing are different per logging tool. Fluentd uses standard built-in parsers (JSON, regex, csv etc.) and Logstash uses plugins for this. This makes Fluentd favorable over Logstash, because it does not need extra plugins installed, making the architecture more complex and more prone to errors"

Fluent-bit

https://docs.fluentbit.io/manual/pipeline/outputs/azure

Pushes data to Azure Data Collector API.

References

| Reference | URL |
|---|---|
| Azure HTTP Data Collector API | https://docs.microsoft.com/en-us/azure/azure-monitor/logs/data-collector-api |
| Azure Log Analytics Sink Connector | https://www.confluent.de/hub/chaitalisagesh/kafka-connect-log-analytics |
| Log Analytics Agent for Linux | https://docs.microsoft.com/en-us/azure/azure-monitor/agents/agent-linux |
| Logstash | https://docs.microsoft.com/en-us/azure/sentinel/connect-logstash |
| Fluent-bit | https://docs.fluentbit.io/manual/pipeline/outputs/azure |
| Kubernetes Logging: Comparing Fluentd vs. Logstash | |