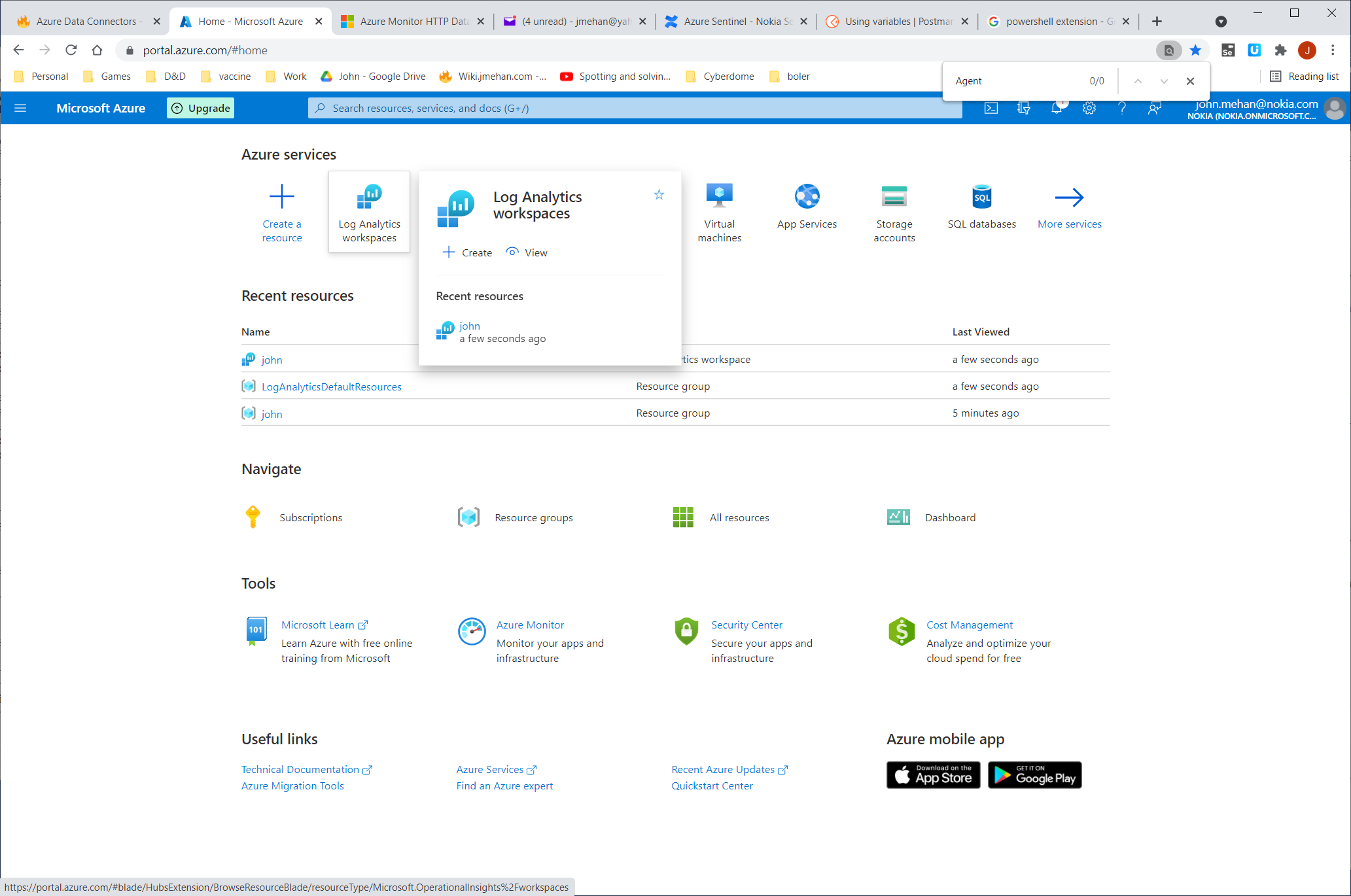

Your Credentials

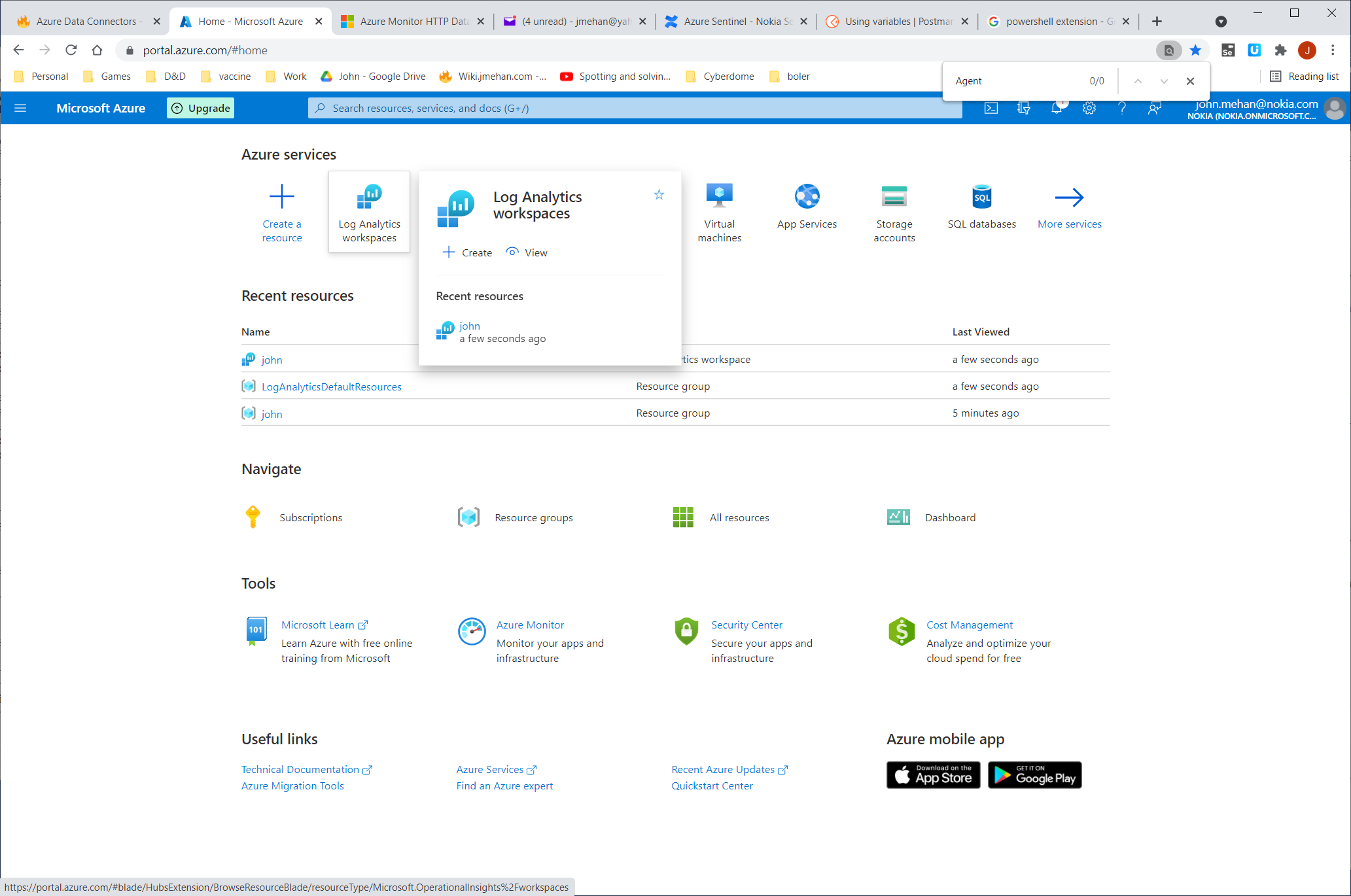

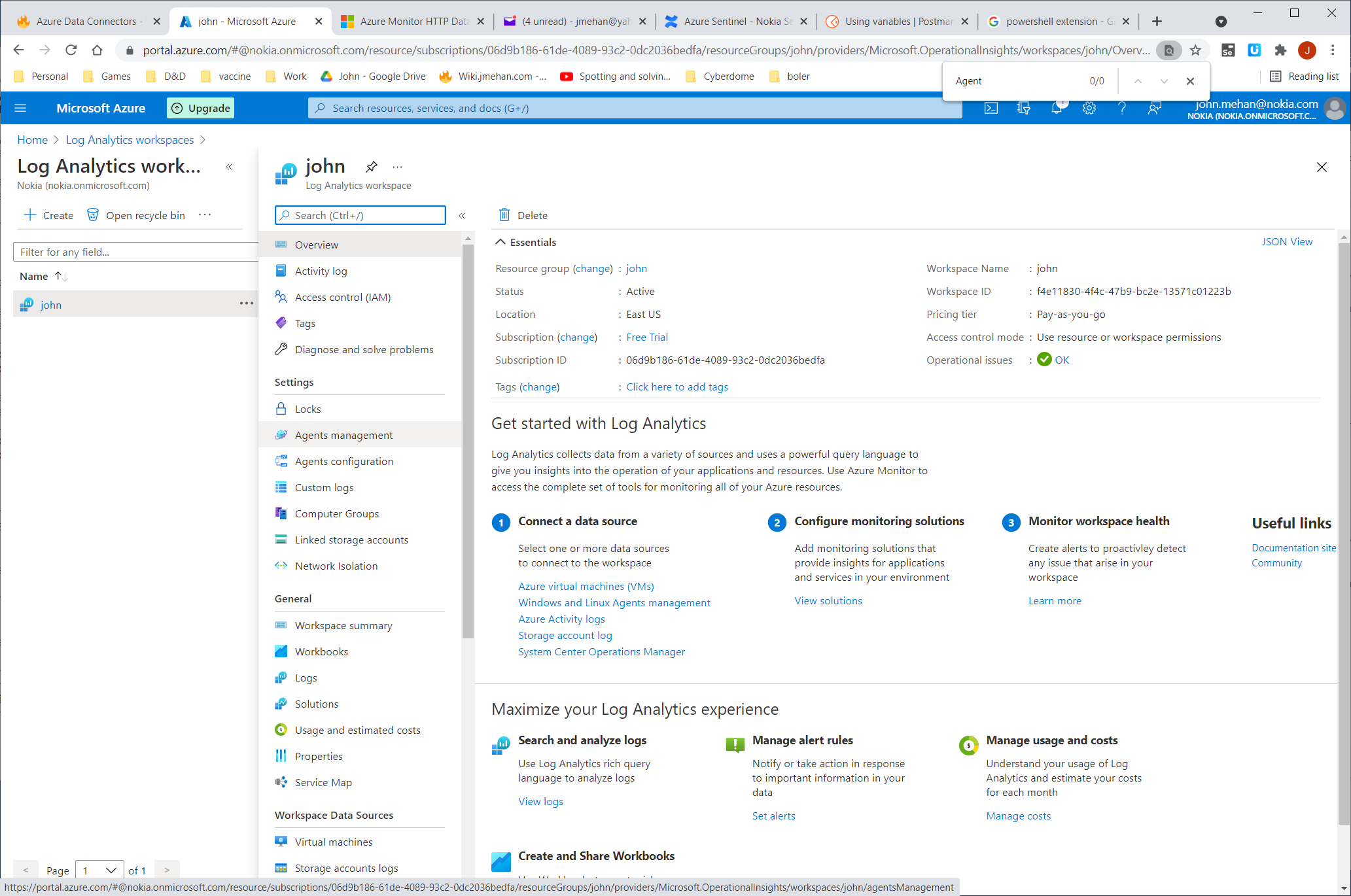

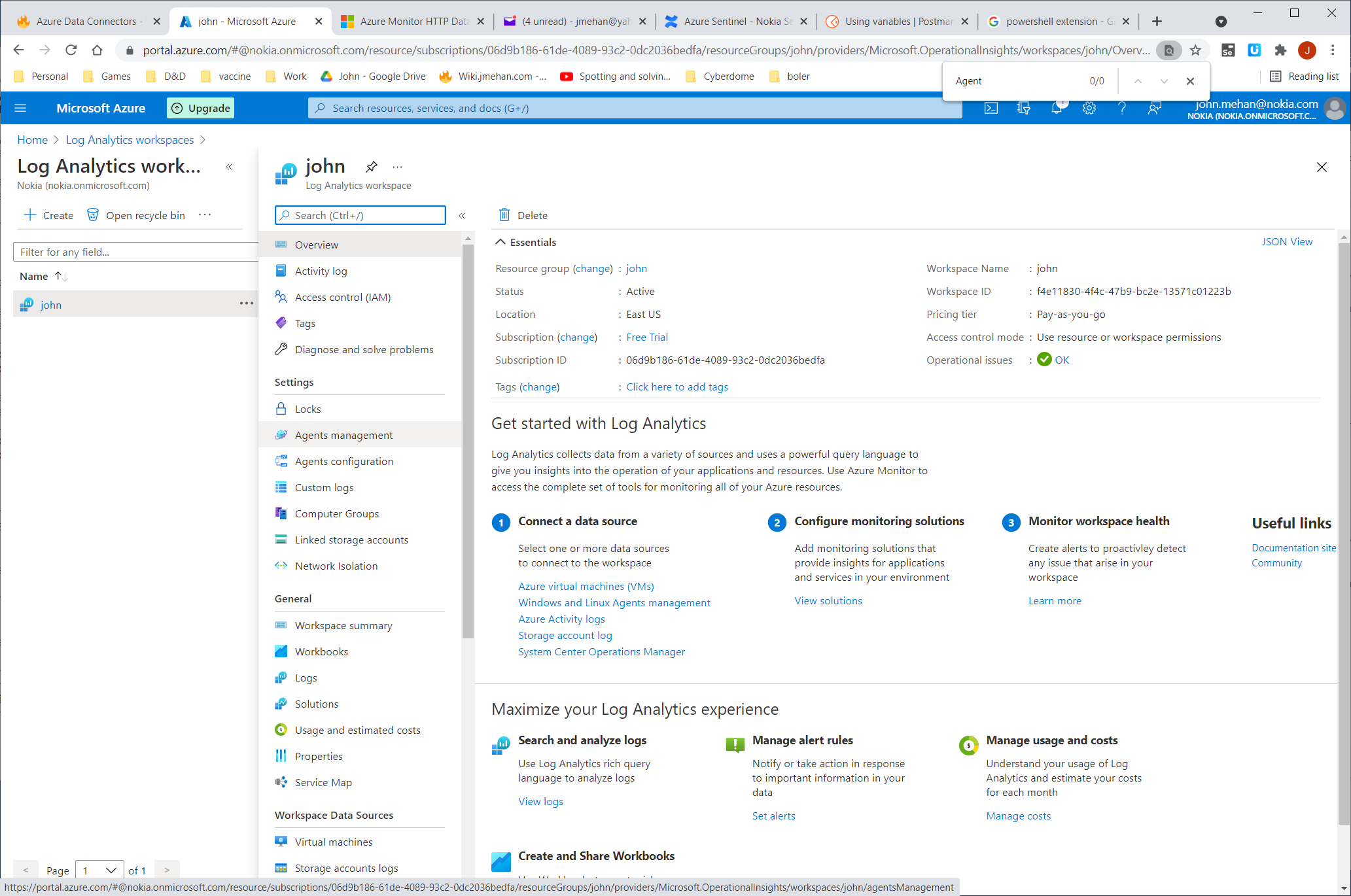

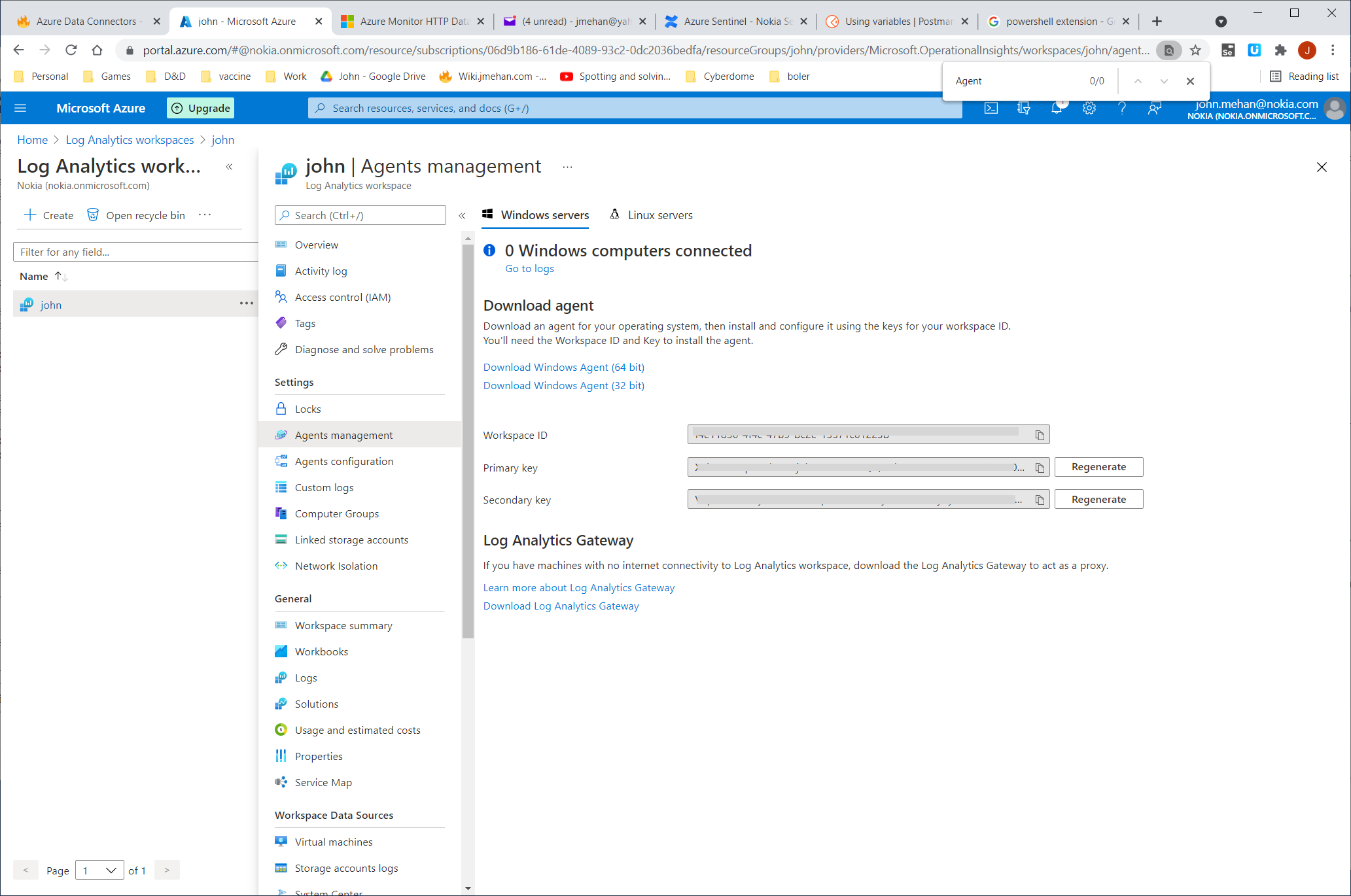

To determine your credentials in Azure:

- Locate your Log Analytics workspace.

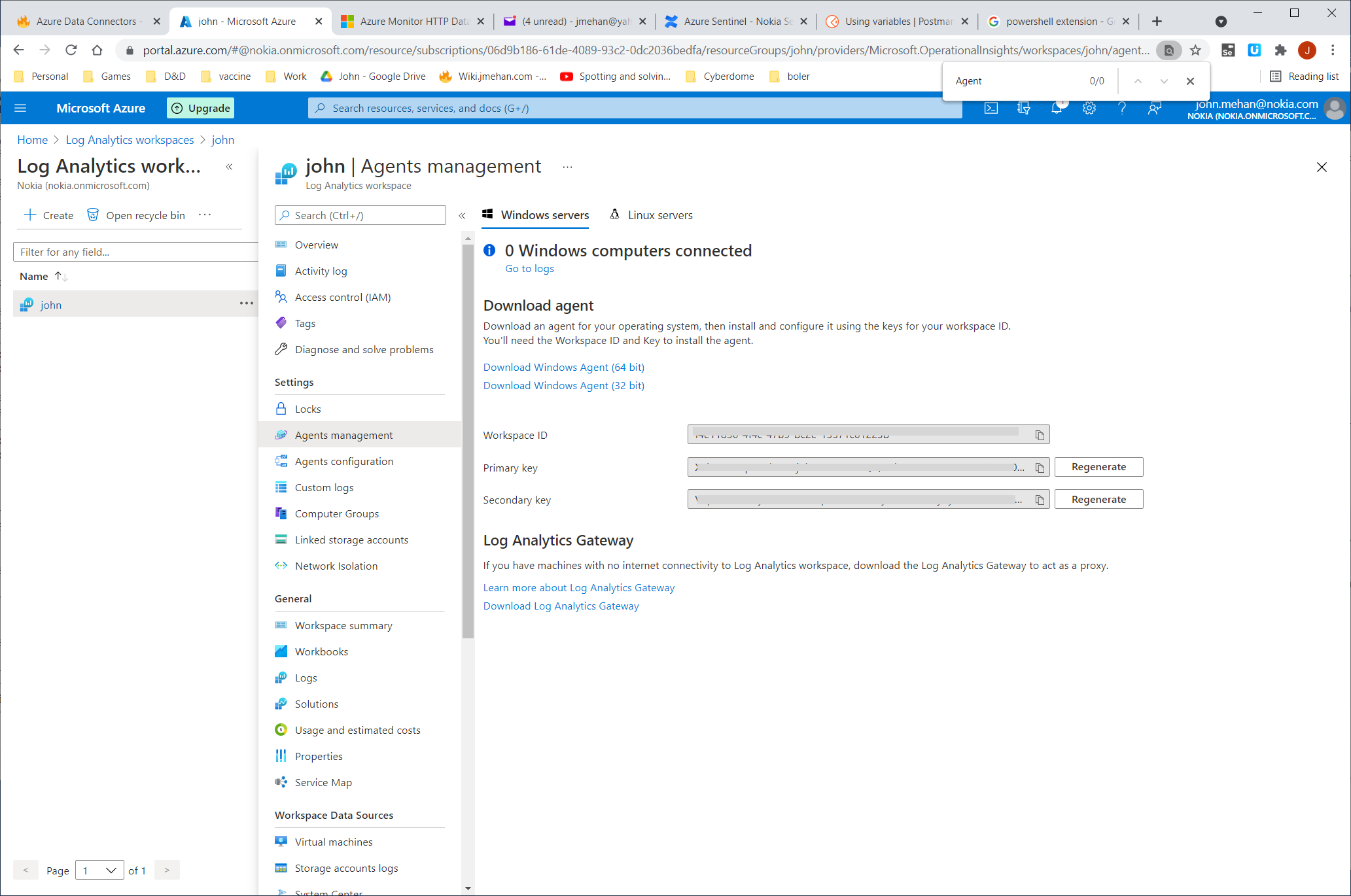

- Select Agents management.

- To the right of Workspace ID, select the copy icon, and then paste the ID as the value of the Customer ID variable.

- To the right of Primary Key, select the copy icon, and then paste the key as the value of the Shared Key variable.
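Before using the copied values, it can help to check that they have the expected shape. The sketch below is not part of the original page: `Test-WorkspaceCredential` is a hypothetical helper name, and it assumes only that the Workspace ID is a GUID and that the Primary Key is Base64 text (the sample script decodes it with `FromBase64String`). It does not contact Azure, so it cannot tell whether the key is actually valid for the workspace.

```powershell
# Hypothetical helper (an assumption, not from the original script):
# checks the shape of the two values copied from Agents management.
function Test-WorkspaceCredential {
    param(
        [string]$CustomerId,
        [string]$SharedKey
    )

    # The Workspace ID is expected to parse as a GUID.
    $parsed = [guid]::Empty
    if (-not [guid]::TryParse($CustomerId, [ref]$parsed)) { return $false }

    # The key must be non-empty Base64, because the signature code decodes it.
    if ([string]::IsNullOrWhiteSpace($SharedKey)) { return $false }
    try {
        [void][Convert]::FromBase64String($SharedKey)
    } catch {
        return $false
    }
    return $true
}

# The untouched placeholder ID is rejected, because "x" is not a hex digit.
Test-WorkspaceCredential -CustomerId "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx" -SharedKey "not base64!"
```

A check like this mainly catches values that were pasted incompletely or left as placeholders.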

Azure HTTP Data Collector API

https://docs.microsoft.com/en-us/azure/azure-monitor/logs/data-collector-api

Sample PowerShell script to push data to your workspace:

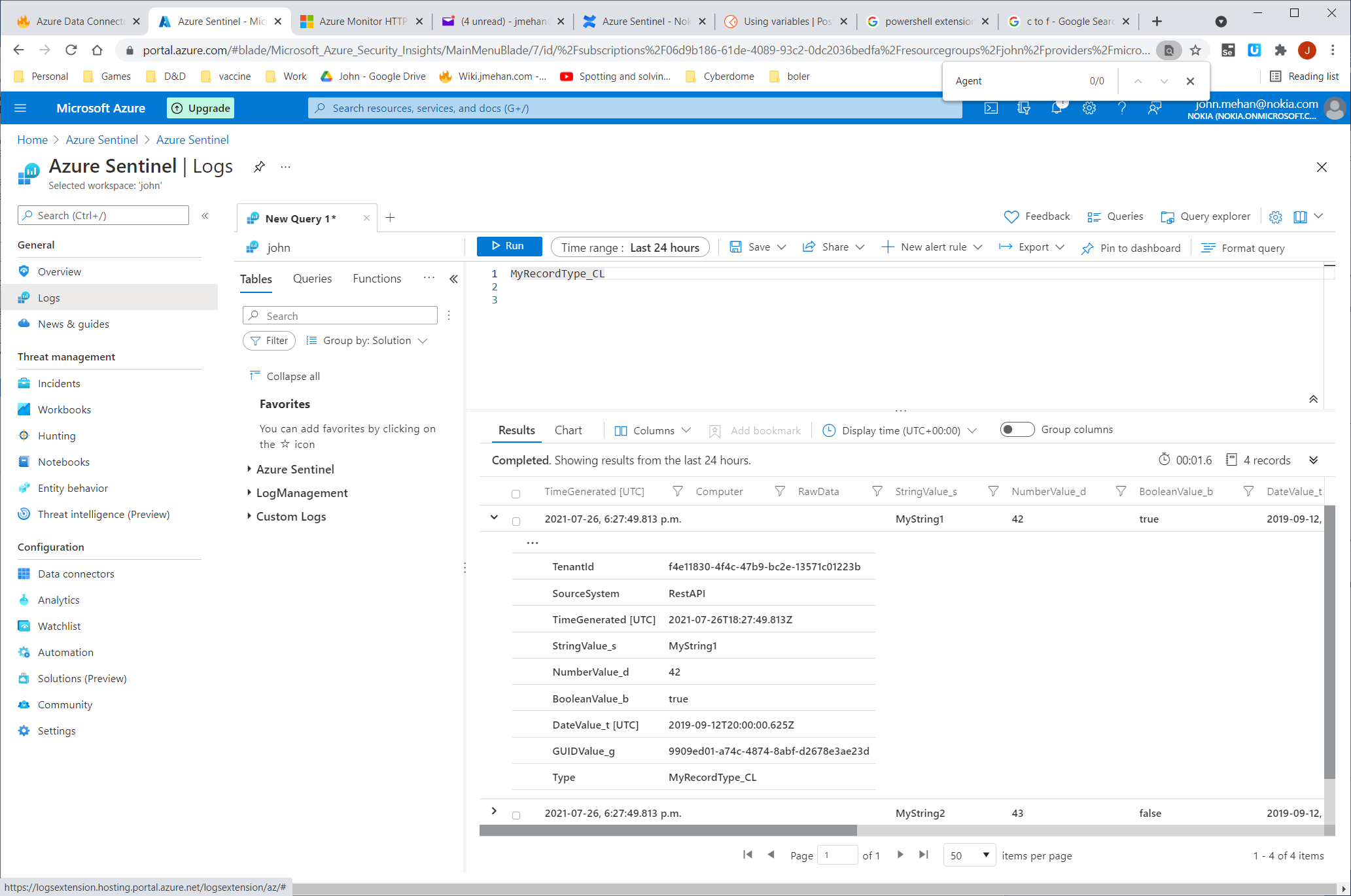

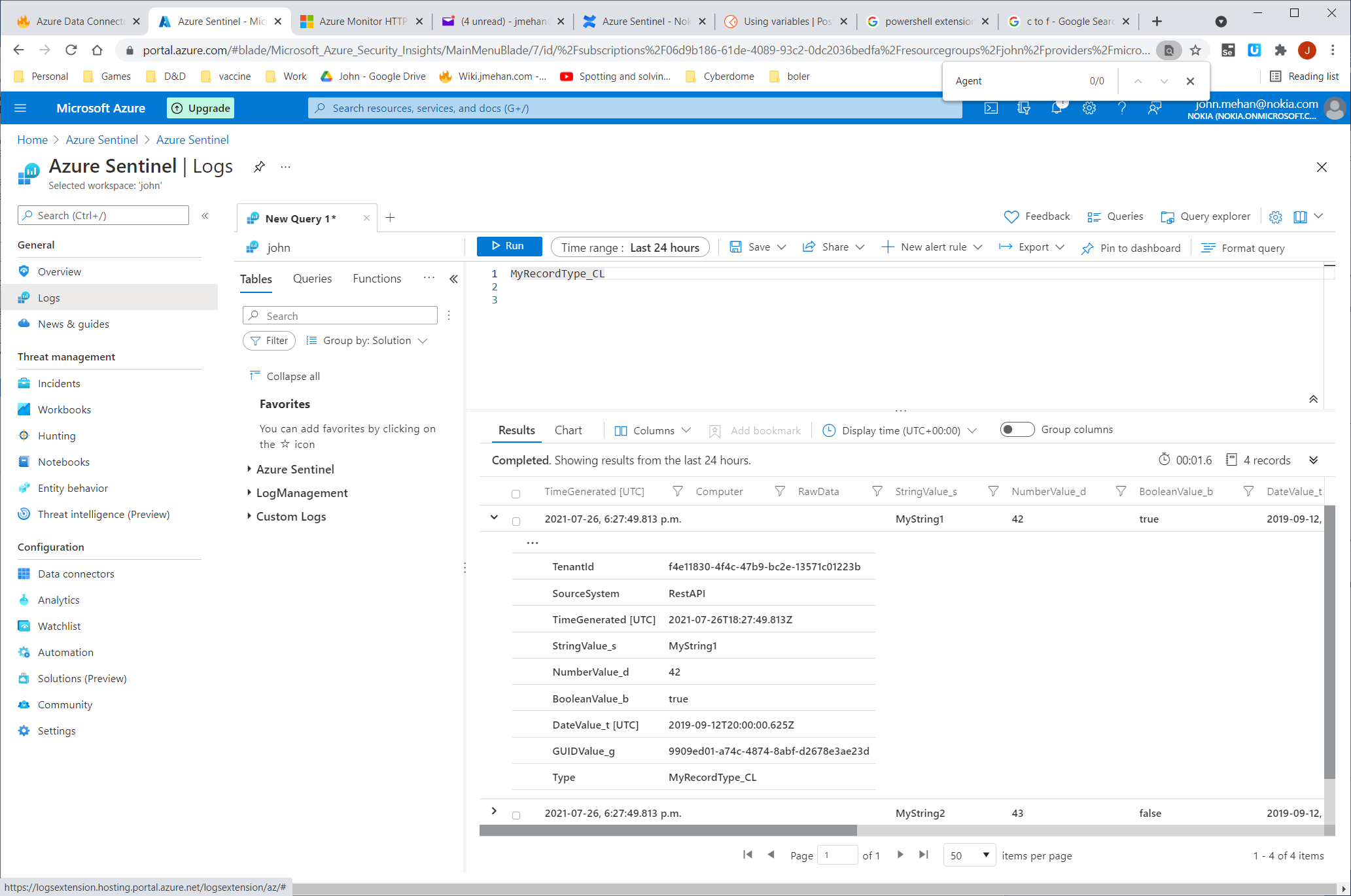

Log type as it appears in the workspace: MyRecordType_CL (Azure Monitor appends the _CL suffix to the $LogType value).

# Replace with your Workspace ID

$CustomerId = "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx"

# Replace with your Primary Key

$SharedKey = "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"

# Specify the name of the record type that you'll be creating

$LogType = "MyRecordType"

# You can use an optional field to specify the timestamp from the data. If the time field is not specified, Azure Monitor assumes the time is the message ingestion time

$TimeStampField = ""

# Create two records with the same set of properties

$json = @"

[{ "StringValue": "MyString1",

"NumberValue": 42,

"BooleanValue": true,

"DateValue": "2019-09-12T20:00:00.625Z",

"GUIDValue": "9909ED01-A74C-4874-8ABF-D2678E3AE23D"

},

{ "StringValue": "MyString2",

"NumberValue": 43,

"BooleanValue": false,

"DateValue": "2019-09-12T20:00:00.625Z",

"GUIDValue": "8809ED01-A74C-4874-8ABF-D2678E3AE23D"

}]

"@

# Create the function to create the authorization signature

Function Build-Signature ($customerId, $sharedKey, $date, $contentLength, $method, $contentType, $resource)

{

$xHeaders = "x-ms-date:" + $date

$stringToHash = $method + "`n" + $contentLength + "`n" + $contentType + "`n" + $xHeaders + "`n" + $resource

$bytesToHash = [Text.Encoding]::UTF8.GetBytes($stringToHash)

$keyBytes = [Convert]::FromBase64String($sharedKey)

$sha256 = New-Object System.Security.Cryptography.HMACSHA256

$sha256.Key = $keyBytes

$calculatedHash = $sha256.ComputeHash($bytesToHash)

$encodedHash = [Convert]::ToBase64String($calculatedHash)

$authorization = 'SharedKey {0}:{1}' -f $customerId,$encodedHash

return $authorization

}

# Create the function to create and post the request

Function Post-LogAnalyticsData($customerId, $sharedKey, $body, $logType)

{

$method = "POST"

$contentType = "application/json"

$resource = "/api/logs"

$rfc1123date = [DateTime]::UtcNow.ToString("r")

$contentLength = $body.Length

$signature = Build-Signature -customerId $customerId -sharedKey $sharedKey -date $rfc1123date -contentLength $contentLength -method $method -contentType $contentType -resource $resource

$uri = "https://" + $customerId + ".ods.opinsights.azure.com" + $resource + "?api-version=2016-04-01"

$headers = @{

"Authorization" = $signature;

"Log-Type" = $logType;

"x-ms-date" = $rfc1123date;

"time-generated-field" = $TimeStampField;

}

$response = Invoke-WebRequest -Uri $uri -Method $method -ContentType $contentType -Headers $headers -Body $body -UseBasicParsing

return $response.StatusCode

}

# Submit the data to the API endpoint

Post-LogAnalyticsData -customerId $customerId -sharedKey $sharedKey -body ([System.Text.Encoding]::UTF8.GetBytes($json)) -logType $logType
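The call above passes the body as UTF-8 bytes, so `$body.Length` inside `Post-LogAnalyticsData` is a byte count rather than a character count. The two differ as soon as the payload contains non-ASCII text. A minimal sketch, using an invented sample value (`Zürich` is an assumption for illustration, not data from this page):

```powershell
# Build the sample text without a non-ASCII literal, so the result does not
# depend on how the script file itself is encoded.
$city = "Z" + [char]0x00FC + "rich"          # "Zürich"; U+00FC takes two bytes in UTF-8
$json = '[{ "StringValue": "' + $city + '" }]'

$bytes = [System.Text.Encoding]::UTF8.GetBytes($json)

$charCount = $json.Length    # number of UTF-16 characters in the string
$byteCount = $bytes.Length   # number of bytes that go on the wire

"Characters: $charCount, bytes: $byteCount"
```

Here the byte count is one larger than the character count. Signing the character count while sending the bytes would make the content length in the signature disagree with the request, which is presumably why the sample converts to bytes before calling the function.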

Query this data in Logs:

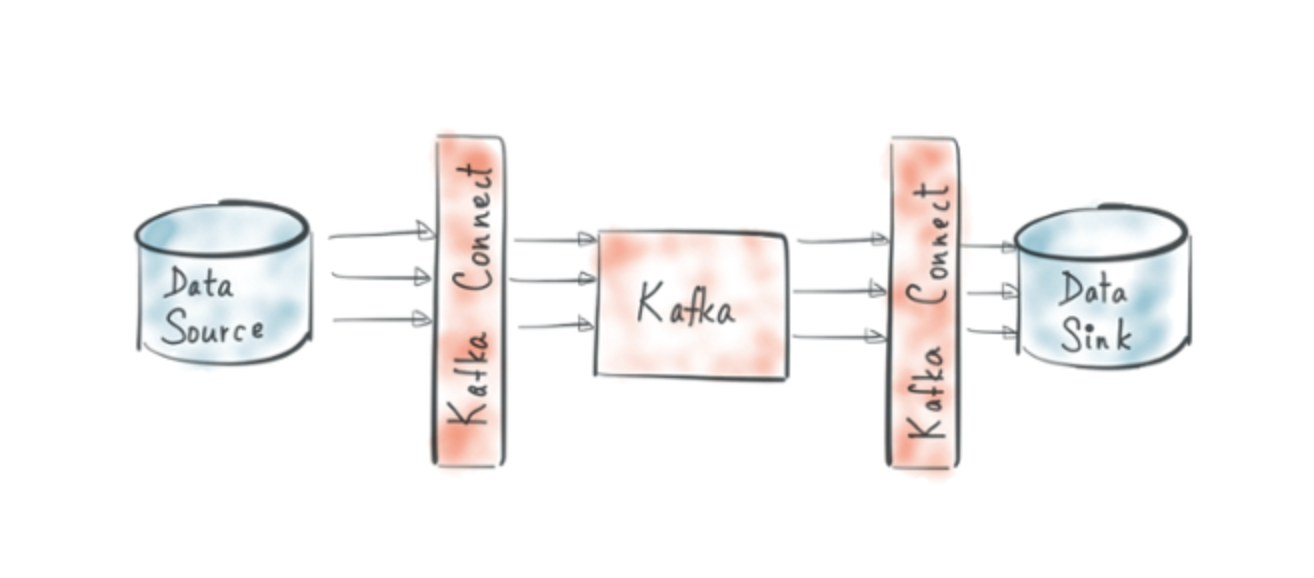

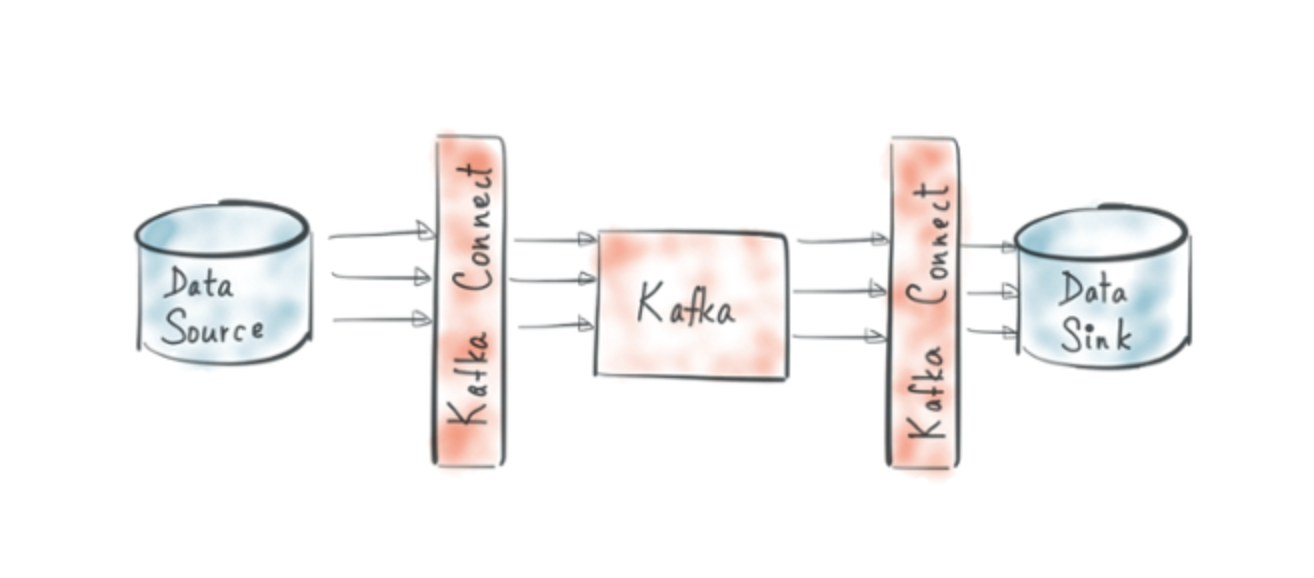
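As a rough sketch of what such a query can look like, the snippet below builds a query string for the sample records in PowerShell. It assumes the `$LogType` value from the sample script and the type suffixes that the Data Collector API adds to custom fields (`_s` string, `_d` number, `_b` boolean, `_t` date, `_g` GUID). The one-hour window is an arbitrary choice.

```powershell
# Table name: the log type from the sample script plus the _CL suffix.
$LogType = "MyRecordType"
$table   = "${LogType}_CL"

# Query text for the sample records; the 1h window is an assumption.
$query = @"
$table
| where TimeGenerated > ago(1h)
| project TimeGenerated, StringValue_s, NumberValue_d, BooleanValue_b, DateValue_t, GUIDValue_g
| order by TimeGenerated desc
"@

$query
```

The printed text can be pasted into the Logs query editor. Assuming the Az.OperationalInsights module is installed and you are signed in, the same string could also be passed to `Invoke-AzOperationalInsightsQuery -WorkspaceId $CustomerId -Query $query`. Newly created custom tables can take some time to appear after the first post.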

Kafka Connect with Azure Log Analytics Sink Connector

https://www.confluent.de/hub/chaitalisagesh/kafka-connect-log-analytics

Log Analytics Agent for Linux

https://docs.microsoft.com/en-us/azure/azure-monitor/agents/agent-linux

Pushes data to Azure Data Collector API.

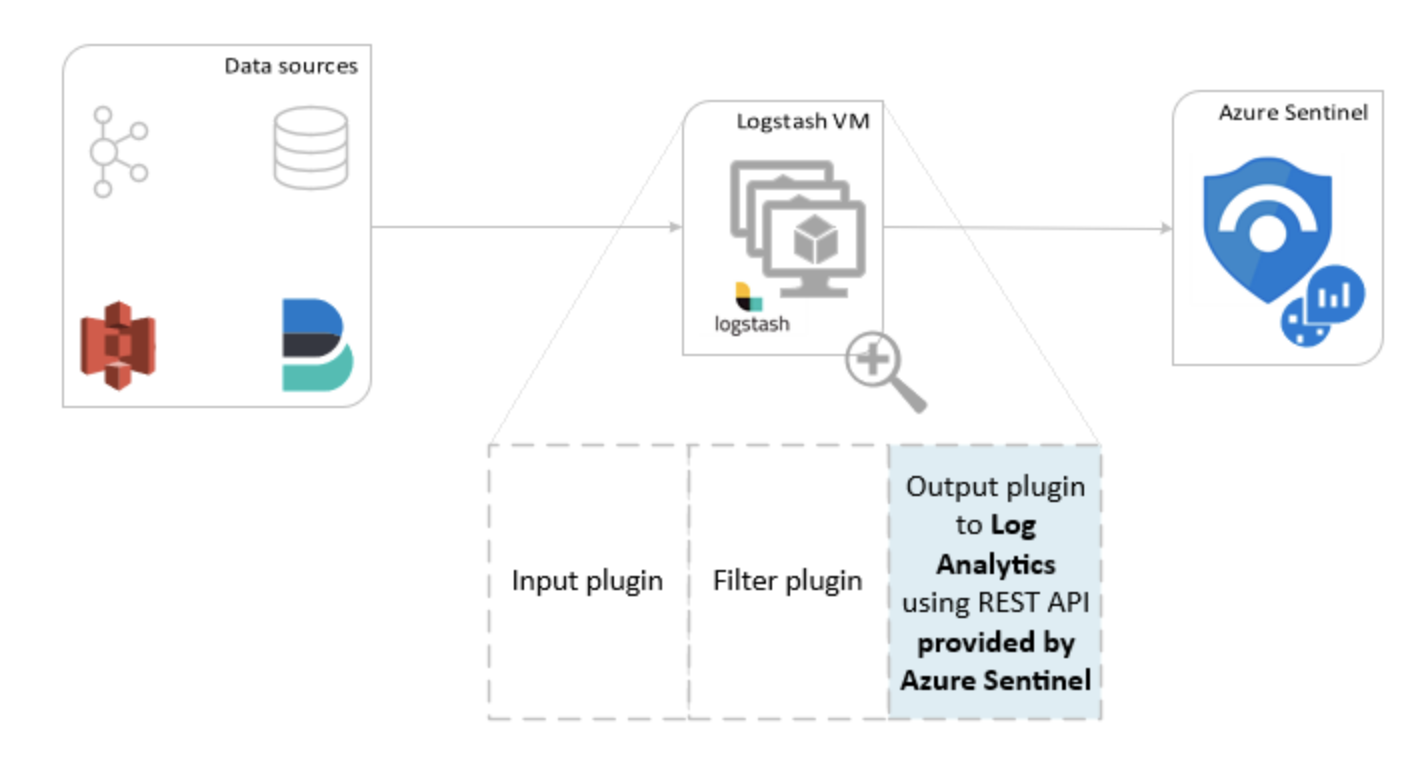

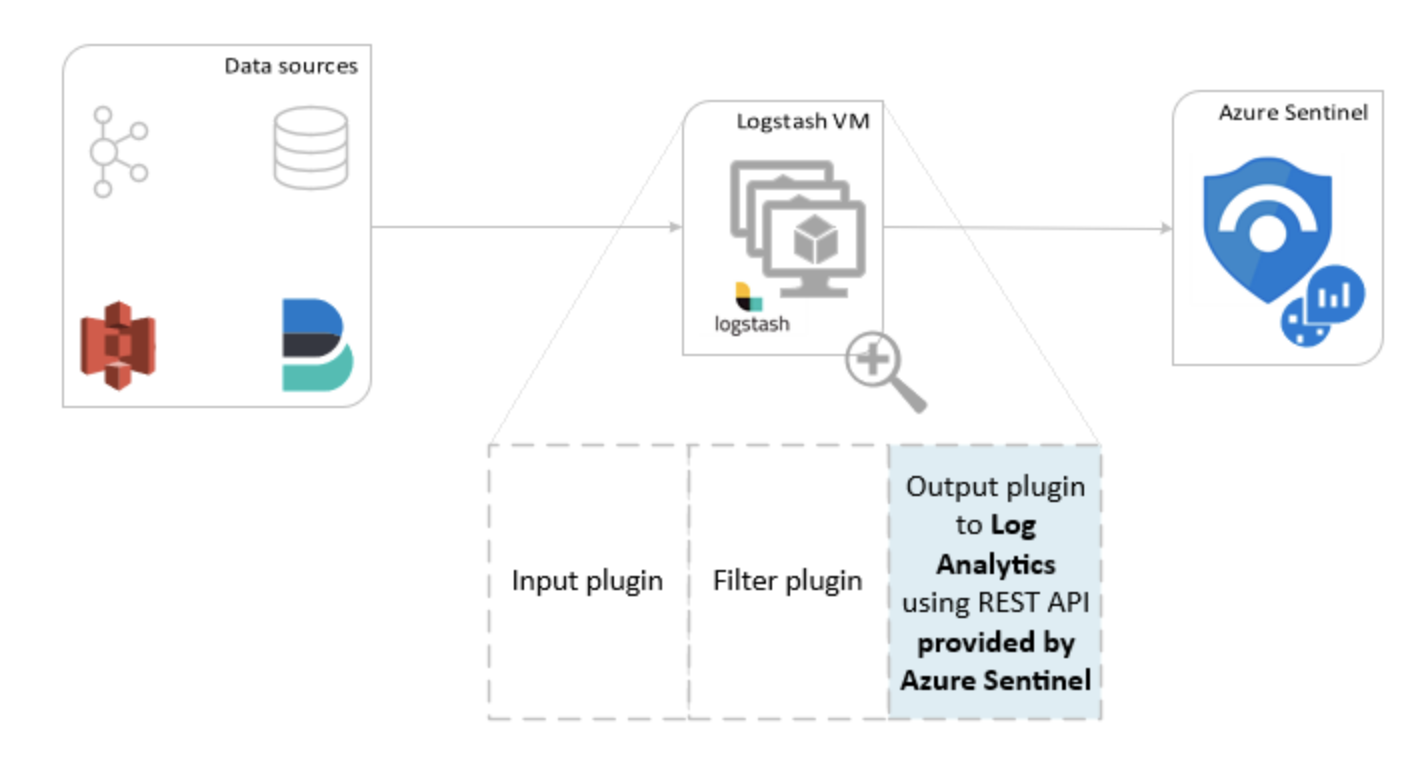

Logstash

https://docs.microsoft.com/en-us/azure/sentinel/connect-logstash

Pushes data to Azure Data Collector API.

"The components for log parsing are different per logging tool. Fluentd uses standard built-in parsers (JSON, regex, csv etc.) and Logstash uses plugins for this. This makes Fluentd favorable over Logstash, because it does not need extra plugins installed, making the architecture more complex and more prone to errors"

Fluent-bit

https://docs.fluentbit.io/manual/pipeline/outputs/azure

Pushes data to Azure Data Collector API.

References