Overview

Apache Kafka is an open source project for a distributed publish-subscribe messaging system rethought as a distributed commit log.

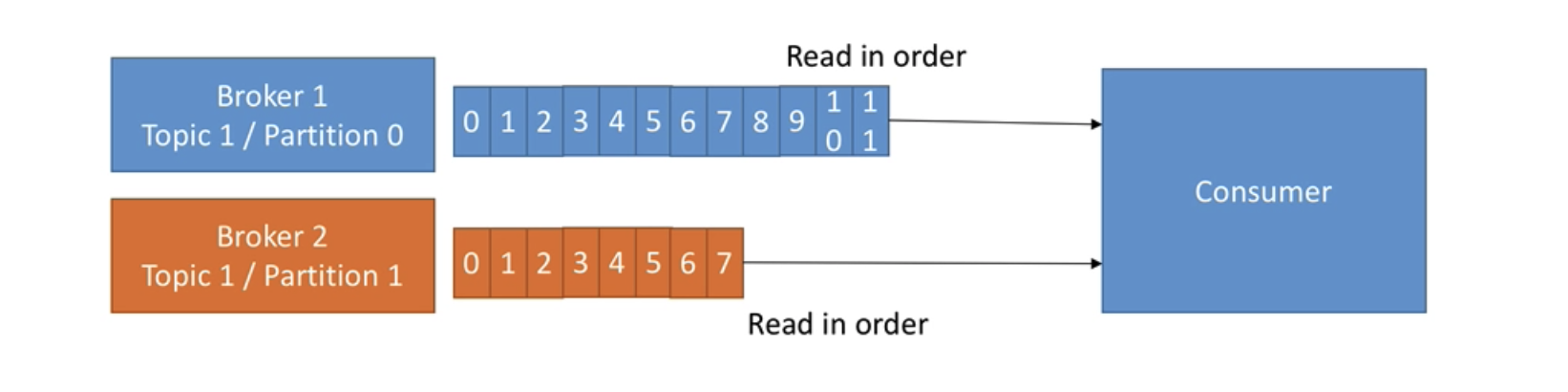

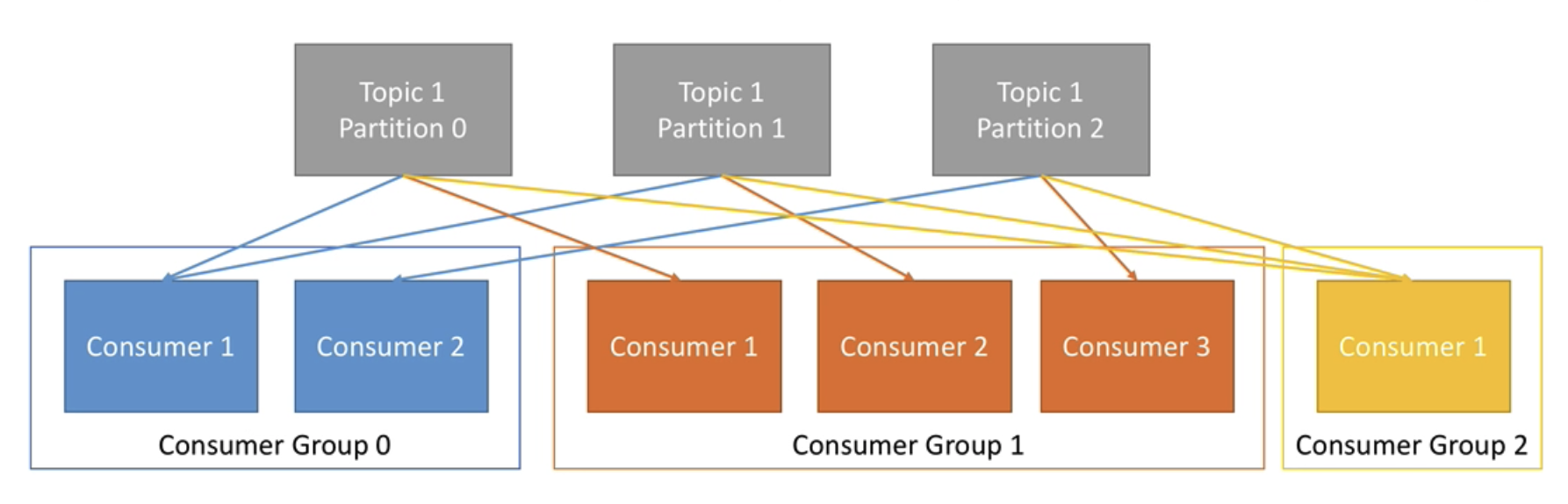

Kafka stores messages in topics that are partitioned and replicated across multiple brokers in a cluster. Producers send messages to topics from which consumers read.
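The "commit log" idea can be illustrated with a small in-memory sketch. This is not Kafka code: the `CommitLog` class and the two consumer names are hypothetical, and the sketch only models a single append-only log in which reading never removes data, so each consumer can keep its own position.

```python
from dataclasses import dataclass, field


@dataclass
class CommitLog:
    """Toy append-only log; a stand-in for one partition of a topic."""
    records: list = field(default_factory=list)

    def append(self, message):
        # Producers only add to the end; existing records are never changed.
        self.records.append(message)
        return len(self.records) - 1  # position of the record just written

    def read_from(self, position):
        # Reading does not delete anything, so many consumers can share the log.
        return self.records[position:]


log = CommitLog()
for msg in ["signup", "login", "purchase"]:
    log.append(msg)

# Two hypothetical consumers, each tracking its own read position.
billing_position = 2
audit_position = 0

billing_view = log.read_from(billing_position)
audit_view = log.read_from(audit_position)
print(billing_view)
print(audit_view)
```

Because each consumer owns its position, a slow or newly added consumer can replay older records without affecting the others.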

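Kafka was created at LinkedIn and is now an open source project of the Apache Software Foundation. Confluent, a company founded by Kafka's original developers, is a major contributor.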

Kafka Use Cases

Some use cases for Kafka:

- Messaging System

- Activity Tracking

- Gathering metrics from many different sources

- Gathering application logs

- Stream processing (with the Kafka Streams API or Spark for example)

- De-coupling of system dependencies

- Integration with Spark, Flink, Storm, Hadoop and many other Big Data technologies

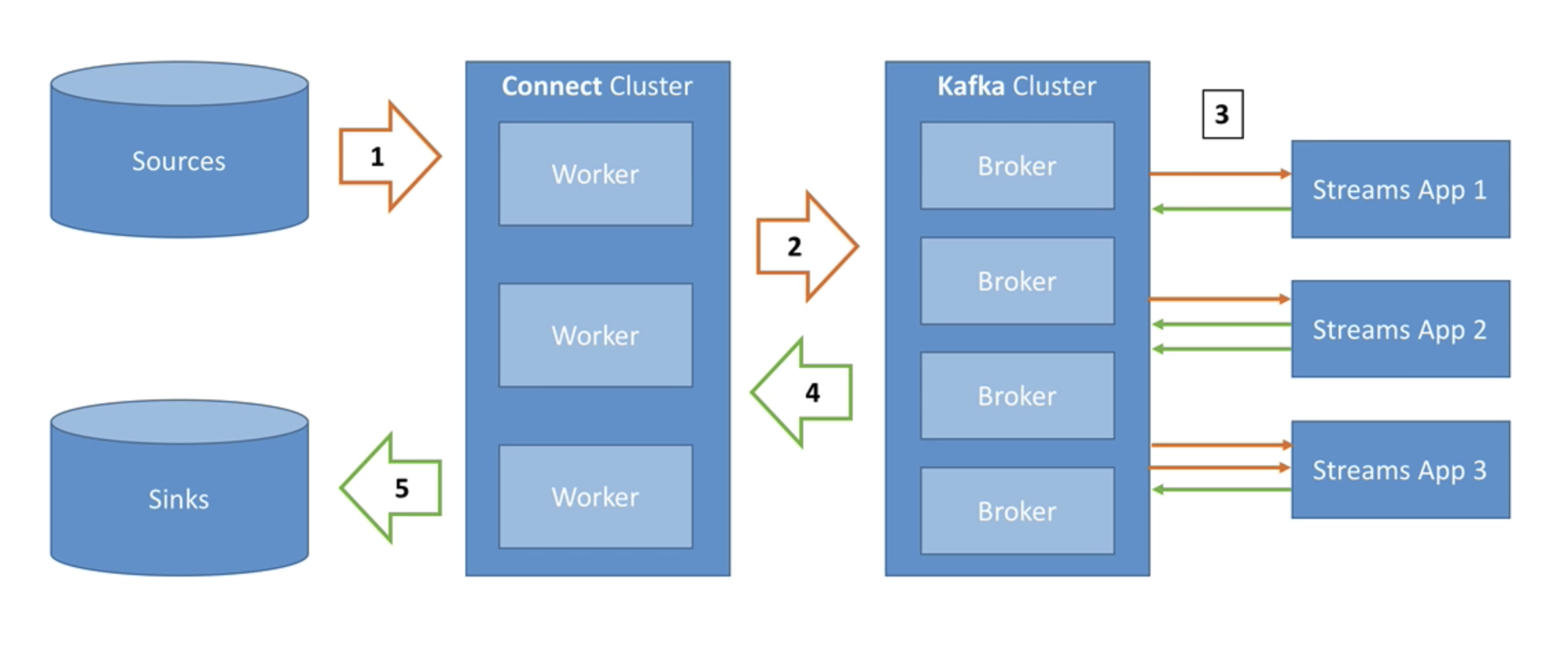

Architecture

- Source Connectors pull data from sources

- Data is sent to the Kafka cluster

- Transformation of topic data into another topic can be done with Streams

- Sink Connectors in the Connect cluster pull data from Kafka

- Sink Connectors push data to sinks
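The flow in the list above can be sketched as a toy pipeline. Nothing here talks to Kafka or Kafka Connect: the three functions are hypothetical stand-ins for a source connector, a Streams transformation, and a sink connector, and plain Python lists play the role of topics and external systems.

```python
def source_connector(rows):
    # Stand-in for a Source Connector: pulls records out of an external system.
    for row in rows:
        yield row


def streams_transform(records):
    # Stand-in for a Streams job: reads one topic and produces a derived topic.
    return [record.upper() for record in records]


def sink_connector(topic, sink):
    # Stand-in for a Sink Connector: pulls from Kafka and pushes to the sink.
    for record in topic:
        sink.append(record)


source_system = ["order created", "order shipped"]

raw_topic = list(source_connector(source_system))  # data is sent to Kafka
derived_topic = streams_transform(raw_topic)       # topic-to-topic transformation
sink_system = []
sink_connector(derived_topic, sink_system)         # data leaves Kafka

print(sink_system)
```

The original topic is left untouched by the transformation, which mirrors how a Streams job writes its results to a separate topic.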

Kafka

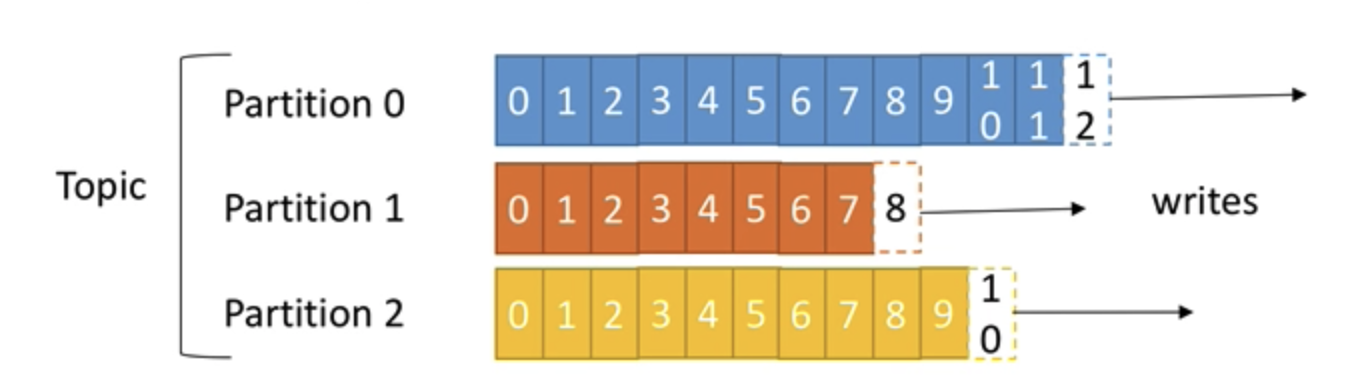

Topics and Partitions

Topics: a particular stream of data

- similar to a table in a database (without constraints)

- you can have as many topics as you want

- a topic is identified by its name

Topics are split into partitions

- each partition is ordered

- each message within a partition gets an incremental id, called an offset

- offsets are only relevant for a particular partition

- order is guaranteed only in a partition (not across partitions)

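- unless a key is provided, the producer picks the partition itself (round-robin or sticky, depending on the client version), so related messages may land in different partitions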

- you can have as many partitions per topic as you want

- specifying a key ensures that all messages with that key are written to the same partition, which preserves their order (as long as the number of partitions does not change)
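The partition and offset rules above can be sketched with a small in-memory model. This is only an illustration under stated assumptions: the `Topic` class, the partition count of 3, and the keys are hypothetical, and `zlib.crc32` is used as a stand-in hash (Kafka's Java client hashes keys with murmur2, so real partition numbers will differ).

```python
import zlib

NUM_PARTITIONS = 3  # hypothetical partition count for one topic


def choose_partition(key, num_partitions=NUM_PARTITIONS):
    # Stand-in hash: the real Java client uses murmur2, not CRC32.
    return zlib.crc32(key.encode("utf-8")) % num_partitions


class Topic:
    """Toy topic: a list of partitions, each an ordered list of messages."""

    def __init__(self, num_partitions=NUM_PARTITIONS):
        self.partitions = [[] for _ in range(num_partitions)]

    def send(self, key, value):
        partition = choose_partition(key, len(self.partitions))
        self.partitions[partition].append((key, value))
        # The offset is the message's position within its own partition only.
        offset = len(self.partitions[partition]) - 1
        return partition, offset


topic = Topic()
placements = [
    (key, *topic.send(key, value))
    for key, value in [
        ("user-1", "login"),
        ("user-2", "login"),
        ("user-1", "add-to-cart"),
        ("user-1", "checkout"),
    ]
]

for key, partition, offset in placements:
    print(f"key={key} partition={partition} offset={offset}")
```

All `user-1` messages report the same partition, and offsets restart from 0 in every partition, which is why an offset is only meaningful together with its partition.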

Kafka Brokers

Consumers (still relevant? - moved to Kafka Connect?)

Installation on Kubernetes

Installing Kafka Cluster

We are using the Bitnami Helm chart:

$ helm repo add bitnami https://charts.bitnami.com/bitnami

"bitnami" has been added to your repositories

$ helm install kafka bitnami/kafka

NAME: kafka

LAST DEPLOYED: Tue Jul 27 11:19:47 2021

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

** Please be patient while the chart is being deployed **

Kafka can be accessed by consumers via port 9092 on the following DNS name from within your cluster:

kafka.default.svc.cluster.local

Each Kafka broker can be accessed by producers via port 9092 on the following DNS name(s) from within your cluster:

kafka-0.kafka-headless.default.svc.cluster.local:9092

To create a pod that you can use as a Kafka client run the following commands:

kubectl run kafka-client --restart='Never' --image docker.io/bitnami/kafka:2.8.0-debian-10-r55 --namespace default --command -- sleep infinity

kubectl exec --tty -i kafka-client --namespace default -- bash

PRODUCER:

kafka-console-producer.sh \

--broker-list kafka-0.kafka-headless.default.svc.cluster.local:9092 \

--topic test

CONSUMER:

kafka-console-consumer.sh \

--bootstrap-server kafka.default.svc.cluster.local:9092 \

--topic test \

--from-beginning

We can see that the pods are deployed:

$ kubectl get pods

NAME                READY   STATUS    RESTARTS   AGE

kafka-0             1/1     Running   3          3h9m

kafka-zookeeper-0   1/1     Running   0          3h9m
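The per-broker DNS names printed in the chart notes follow the usual Kubernetes pattern for a StatefulSet pod behind a headless service (`<pod>.<service>.<namespace>.svc.<cluster domain>`). As a minimal sketch, a client could build its bootstrap server list as follows. The helper name `broker_addresses` is hypothetical, and the `<fullname>-headless` service name is inferred from the output above for a release called `kafka`; other release names or chart versions may produce different names.

```python
from typing import List


def broker_addresses(fullname: str, namespace: str, replicas: int,
                     port: int = 9092,
                     cluster_domain: str = "cluster.local") -> List[str]:
    # Assumption: pods are named <fullname>-<index> and the headless service
    # is named <fullname>-headless, as in the chart notes shown above.
    return [
        f"{fullname}-{i}.{fullname}-headless.{namespace}.svc.{cluster_domain}:{port}"
        for i in range(replicas)
    ]


bootstrap_servers = ",".join(broker_addresses("kafka", "default", replicas=1))
print(bootstrap_servers)  # kafka-0.kafka-headless.default.svc.cluster.local:9092
```

With more replicas, the same helper returns one address per broker, which can be passed as a comma-separated list to a client's bootstrap servers setting.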

Installing Kafka Connect Cluster

...

References

| Reference | URL |
|---|---|
| Apache Kafka in 5 minutes | https://www.youtube.com/watch?v=PzPXRmVHMxI |
| Kafka Topics, Partitions and Offsets Explained | https://www.youtube.com/watch?v=_q1IjK5jjyU |
| Kafka Helm Chart | https://bitnami.com/stack/kafka/helm |
| Kafka Helm Chart Source | https://github.com/bitnami/charts/tree/master/bitnami/kafka/#installing-the-chart |